While setting up our new environment for SAP HANA validation work, I spent some time in the data center and ran into a few caveats that I figured I would share.

To set the stage, I am working with a Lenovo HX Nutanix cluster. The cluster consists of two HX-7820 appliances, each with 4x Intel 8180M CPUs, 3TB RAM, NVMe, SSD and, among other things, two Mellanox CX-4 dual-port NICs. The other two appliances are HX-7821s with pretty much the same configuration, except that these systems have 6TB of RAM. The idea is to give this cluster as much performance as we can, and to do that we decided to switch on Remote Direct Memory Access, or RDMA for short.

Now, switching on RDMA isn’t that hard. Nutanix added support for RDMA in AOS 5.5, and in keeping with our “one-click” mantra, it is as simple as going into the Prism web interface, clicking the gear symbol, going to “Network Configuration”, and on the “Internal Interface” tab enabling RDMA and entering the subnet and VLAN you want to use as well as the priority number. On the switch side, you don’t need anything extremely complicated. On our Mellanox switch we did the following (note that you’d normally need to disable flow control on each port, but this is the default on Mellanox switches):

    interface vlan 4000
    dcb priority-flow-control enable force
    dcb priority-flow-control priority 3 enable
    interface ethernet 1/29/1 dcb priority-flow-control mode on force
    interface ethernet 1/29/2 dcb priority-flow-control mode on force
    interface ethernet 1/29/3 dcb priority-flow-control mode on force
    interface ethernet 1/29/4 dcb priority-flow-control mode on force

With all of that in place, you would normally expect to see a small progress bar and that is it. RDMA set up and working.

Except that it wasn’t quite as easy in our scenario…

You see, one of the current caveats is that when you image a Nutanix host with AHV, we pass through the entire PCI device, in this case the NIC, to the controller VM (cVM). The benefit is that the cVM now has exclusive access to the PCI device. The issue that arises is that we currently cannot pass through a single port, which isn’t ideal in the case of a NIC that has multiple ports. Add on top of that the fact that we don’t give you the choice of which port to use for RDMA, and the situation becomes slightly muddied.

So, first off: we essentially do nothing more than check whether we have an RDMA-capable NIC, and we pass through the first one that we find during the imaging process. In a normal situation, this will always be the RDMA-capable NIC in the PCI slot with the lowest slot number. It will also normally be the first NIC port that we find. This means that if you have, for example, a non-RDMA-capable Intel NIC in PCI slot 4 and two dual-port RDMA-capable cards in slots 5 and 6, your designated RDMA interface is going to be the first port on the card in slot 5.
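
To make that selection rule concrete, here is a small shell model of it. This is only a sketch of the behavior as I described it, not the actual imaging code; the input format (slot number, RDMA capability flag, NIC name), the NIC names and the function name `pick_rdma_nic` are all made up for illustration.

```shell
# Hypothetical model of the selection rule: among the RDMA-capable NICs,
# take the one in the lowest-numbered PCI slot.
# Input (stdin): one NIC per line as "<slot> <yes|no> <name>".
pick_rdma_nic() {
    awk '$2 == "yes"' | sort -n -k1,1 | head -n 1
}

# Example inventory matching the scenario above: a non-RDMA Intel NIC in
# slot 4 and two RDMA-capable dual-port cards in slots 5 and 6.
printf '%s\n' \
    '4 no intel-nic' \
    '6 yes cx4-slot6' \
    '5 yes cx4-slot5' | pick_rdma_nic
```

With this inventory the function prints the slot 5 entry, no matter in which order the cards are listed.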

Since you might want to see what MAC-address is being used, you can check from the cVM by running the ifconfig command against the rdma0 interface. Note that this interface by default will exist, but isn’t online, so it will not show up if you just run an ifconfig command without parameters:

    ifconfig rdma0
    rdma0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet6 fe80::ee0d:9aff:fed9:1322 prefixlen 64 scopeid 0x20<link>
            ether ec:0d:9a:d9:13:22 txqueuelen 1000 (Ethernet)
            RX packets 71951 bytes 6204115 (5.9 MiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 477 bytes 79033 (77.1 KiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

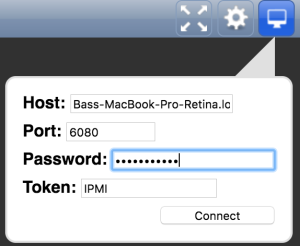

To double-check that the correct interface is connected to your switch ports, my tip would be to access the lights-out management interface (IMM, iLO, iDRAC, IPMI, etc.) and check your PCI devices from there. Usually it will tell you the MAC address of the interface for each PCI device. Make sure you are connected to the right physical NIC and switch port.
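
If you want to script that comparison on the cVM, something along these lines should do. It is a minimal sketch that assumes the interface is visible under the standard Linux `/sys/class/net/<interface>/address` file; the function name `mac_matches` and its arguments are my own invention, not a Nutanix tool.

```shell
# Compare the MAC address of an interface with the one shown by the
# lights-out management interface. Case and '-' vs ':' separators are ignored.
# Usage: mac_matches <expected-mac> [interface] [sysfs-net-dir]
# Returns 0 on a match, 1 on a mismatch, 2 if the interface is not found.
mac_matches() (
    expected=$1
    iface=${2:-rdma0}
    netdir=${3:-/sys/class/net}

    # Lowercase the hex digits and turn '-' separators into ':'.
    normalize() { printf '%s' "$1" | tr 'A-F' 'a-f' | sed 's/-/:/g'; }

    if [ ! -r "$netdir/$iface/address" ]; then
        echo "$iface: no such interface" >&2
        exit 2
    fi
    actual=$(cat "$netdir/$iface/address")

    if [ "$(normalize "$actual")" = "$(normalize "$expected")" ]; then
        echo "$iface: MAC matches ($actual)"
    else
        echo "$iface: MAC is $actual, expected $expected" >&2
        exit 1
    fi
)
```

For the interface shown above, you would call it as `mac_matches EC-0D-9A-D9-13-22`, using whatever notation your management interface displays.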

The next topic that might come up is the fact that we will automatically disable c-states on the AHV host in the process of enabling RDMA. This is all done in the background, and again normally will be done automatically. In our case, since we added a couple of new nodes to the cluster, the BIOS settings were not the same across the cluster. The result of that was that on the AHV hosts, the HX-7820 nodes had the following file available that contained a value of “1”:

/sys/devices/system/cpu/cpu*/cpuidle/state[3-4]/disable

Because the BIOS settings were different on the HX-7821 hosts that we added, this file and the cpuidle (sub-)directories didn’t exist on those hosts. So while the RDMA script tried to disable c-states 3 and 4 on all hosts, it only succeeded on two of the four nodes in the cluster. Upon comparing the BIOS settings, we noticed some deviations in the available settings due to differences in versions, and differences in some of the settings as they were delivered to us (MWAIT for example). After modifying the settings to match the other systems, the directories were available and we could apply the c-state settings to all systems.
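
A quick way to spot this on an AHV host is to look at those files directly. The following is a rough sketch built around the path shown above; the function name `check_cstates` and its exit codes are my own choices, and it is not the script that the RDMA enablement runs.

```shell
# Report whether C-states 3 and 4 are disabled on every CPU of this host.
# Usage: check_cstates [cpu-sysfs-dir]
# Returns 0 if all entries contain "1", 1 if some do not,
# and 2 if the cpuidle entries do not exist at all.
check_cstates() (
    cpudir=${1:-/sys/devices/system/cpu}
    found=0
    enabled=0
    for f in "$cpudir"/cpu*/cpuidle/state[3-4]/disable; do
        [ -e "$f" ] || continue    # the glob did not match anything
        found=$((found + 1))
        [ "$(cat "$f")" = "1" ] || enabled=$((enabled + 1))
    done
    if [ "$found" -eq 0 ]; then
        echo "no cpuidle state3/state4 entries found (check BIOS settings)"
        exit 2
    fi
    echo "$found entries checked, $enabled not disabled"
    [ "$enabled" -eq 0 ]
)
```

Running `check_cstates` without arguments checks the local host. A return code of 2 corresponds to the situation we had on the newly added nodes, where the directories were missing altogether.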

While we obviously have some work to do to add some more resiliency and flexibility to the way we enable RDMA, and it doesn’t hurt to have an operational procedure to ensure settings are the same on all systems before going online with them, I just want to emphasize one thing:

One click on the Nutanix platform works beautifully when all systems are the same.

There are, however, quite a few caveats that come into play when you work with a heterogeneous environment/setup:

- Double check your settings at the BIOS level. Make them uniform as much as you can, but be aware of the fact that sometimes certain settings or options might not even be available or configurable anymore.

- Plan your physical layout. Try not to mix hosts with different numbers of adapters.

- Create a physical design that can assist the people cabling with what to plug where to ensure consistency.

- You can’t always avoid making changes to a production system, but if at all possible, have a similar smaller cluster for the purpose of quality assurance.

- If you are working in a setup with a variety of systems, things will hopefully work as designed, but they might not. Log tickets where possible, and provide info that goes a bit further than “it doesn’t work”. 😉

Oh, and one more thing. Plan extra time. The “quick” change of cables and enabling of RDMA ended up taking 4 hours in the data center to work through all of this. And that is with me being pretty familiar with all of this. If you are new to this, again if at all possible, take your time to work through it, rather than doing it on the fly and running into issues when you are supposed to be going live. 🙂